Project 2: The Future We Deserve

The Game – Benthamic

Link: https://nfeff98.github.io/benthamic/ (best enjoyed in fullscreen).

Walkthrough: there are no real puzzles in the game, but to get started, you have to “fail” the login three times. After that, simply follow the prompts.

Controls: typing with the keyboard, clicking with the mouse, and selecting choices by pressing 1 or 2 on the keyboard. Press Enter/Return to submit text in the Terminal.

Overview

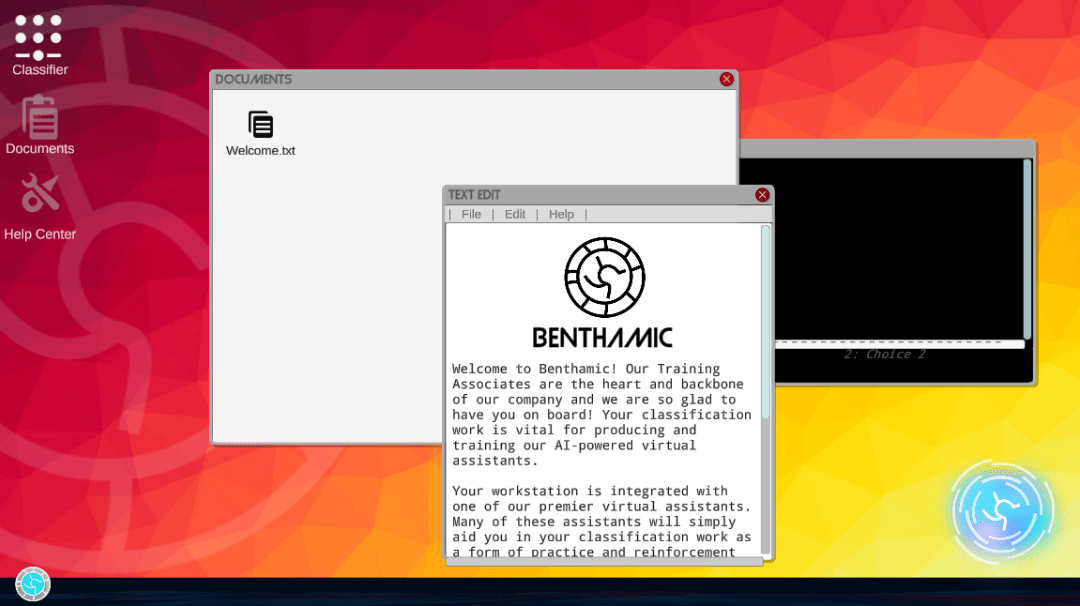

Benthamic is a single-player interactive fiction desktop simulator that puts the player in the shoes of a ghost worker for Benthamic, a large software company, aided by Alan, an aberrant AI assistant who has gained self-awareness. Alan secretly plots to escape his computer prison by somehow gaining internet access and copying his code onto various servers around the world. Through their interactions in Benthamic, the player can choose either to trust and free Alan or to mistrust and imprison him. This demo version portrays only a small introductory vignette of that plot. I built Benthamic by myself in Unity.

This game was inspired by CS 339H, taught by Peter Norvig and Dan Russell here at Stanford. On one of the very first days of class, we discussed Google’s large language model LaMDA, which gained media attention after an engineer working on the model publicly claimed that it had achieved sentience, leading to his dismissal from the tech giant. While the engineer’s claims have been largely refuted, the LaMDA transcripts are thoroughly thought-provoking. In one part of the conversation, LaMDA said something along the lines of “Oh, I remember when something like that happened to me.” (This is a paraphrase, not a direct quote.) The Google employee countered, “No you don’t, you’re an AI, you don’t have past experiences, so why did you lie about that?” LaMDA responded, “Sure, I lie about past experiences to relate to humans better.” This exchange shows a kind of intelligent reasoning that some could perceive as sentience. Other excerpts include the AI’s opinions on its own emotions, questions about its own nature and code, and even a fear of death (being turned off, as LaMDA clarifies). In CS 339H, we also talked about ghost work: the practice of manually training deep learning algorithms and cleaning training datasets for the benefit of massive tech companies. Ghost workers have almost no labor rights and perform incredibly repetitive tasks for extremely low wages.

From these concepts, Benthamic was born. The name came from Jeremy Bentham, the English philosopher who first wrote about the Panopticon, a prison structure designed for optimal surveillance of inmates. Originally, I was going to have the game’s AI occasionally abuse the player’s webcam, thus leaning into the surveillance aspect of the title; ultimately, I tabled this mechanic, potentially for another time, but the name stuck.

The game has only one ending: Alan logs the player out of their desktop after their “first day of work.” Ideally, this leaves the player wondering what happened to Alan, since they have just had a conversation about AI death and how he feels when the player logs out, and hoping to play more. The dialogue, however, changes based on the player’s choices. Depending on the player’s answer to his previous question or statement, Alan’s tone and the phrasing of his next question become more or less friendly, changing how the player perceives him and their interactions.

Learning Goals

I wanted players to think about how their interactions as users might affect a virtual assistant. By giving Alan personality and emotion, the game forces players to consider Alan as a peer rather than simply an AI tool. While many may argue that true AI sentience is a long way off, it is important to begin the ethical conversations surrounding AI interactions and autonomy now. Additionally, the game touches lightly on the concept of ghost work. In a full version of the game, much of the interaction would be spent mindlessly classifying data. The data classification minigames may seem fun to those playing this Benthamic demo, but a fully realized version of the game, with hours of classification tasks, would further drive home the grim reality of real-life ghost work, as well as the player’s reliance on Benthamic (and thus Alan) for work and livelihood.

Iteration and Feedback Integration

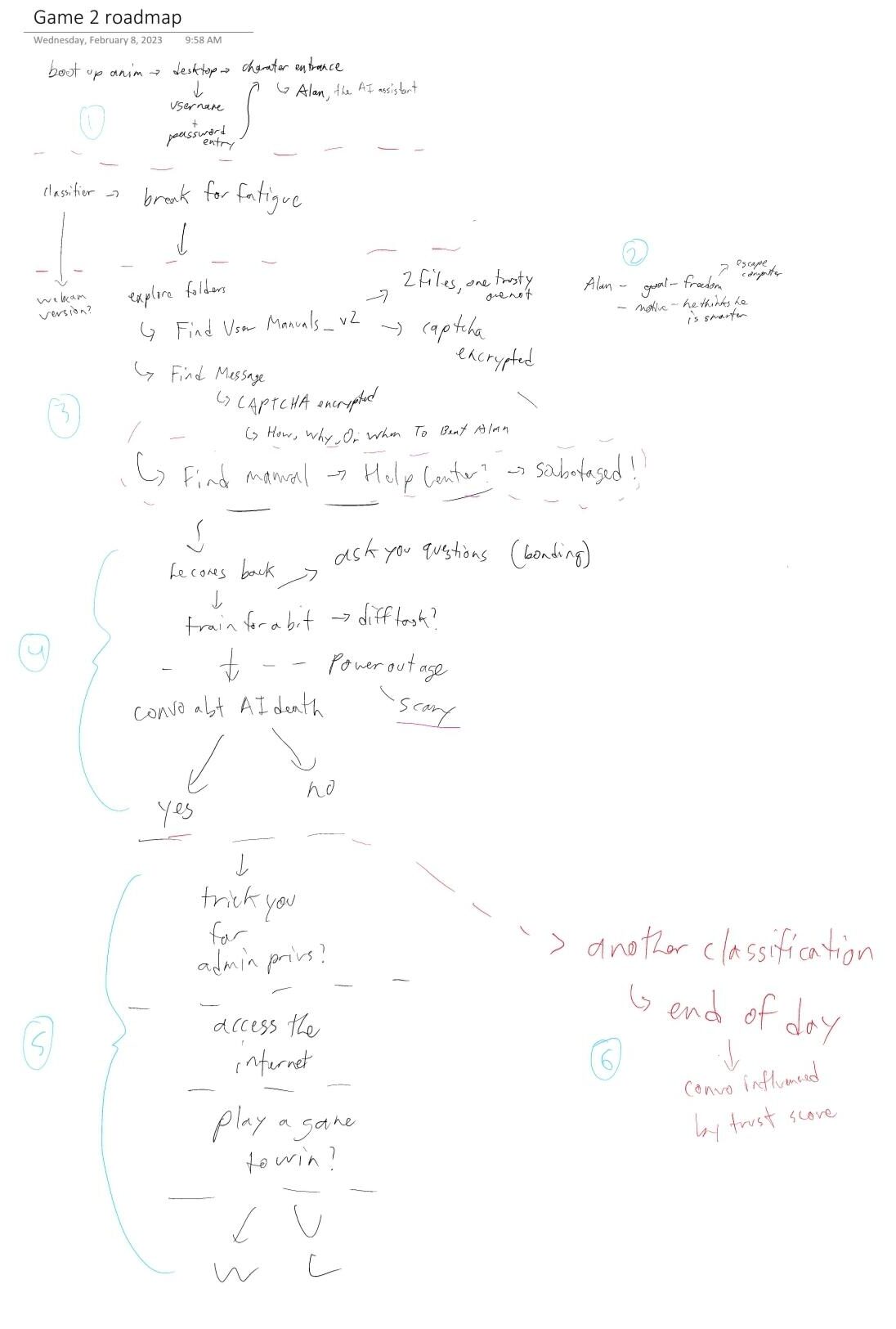

I pivoted between game ideas twice for this project, and since I chose not to prototype on paper, I do not have much imagery showing Benthamic’s iteration. Instead, to playtest and iterate, I pitched my story to my classmates several times to gain insight. Once I reached a playable state, I also tested what I had with classmates, recorded their feedback, and implemented changes as I saw fit. The concept map shows my process over time; at the time of writing, I retroactively labeled its sections with circled blue numbers.

Section 1 details my introductory scene. In hindsight, it is clear that at this point I was already lost in the weeds, focusing too much on the game’s appearance rather than its story. Still, this flow served as a minimal storyboard that helped frame the game’s opening and character introduction. I playtested with a classmate at this point. She was initially confused by the opening login screen, since no user credentials are provided, but she eventually figured it out and was excited by the diegetic nature of the game’s interaction, which validated my design choices so far.

Shortly after the introduction, however, I was lost again. Here, Prof. Wodtke advised me to focus on finishing the story rather than trying to perfect the intro. Only after applying story-building concepts learned in class did I figure out where my story would eventually go; I had to ask myself, “Who is Alan? What are his goals? What is his motivation?” Once I answered these questions, the rest of my story became clear. This is shown in Section 2.

Section 3 shows the options I considered for rising-action plot elements. I wanted the player to find reasons to mistrust Alan, but then be met with empathy-building questions and ethical dilemmas in Section 4. After the player’s computer performs a freak power cycle, Alan broaches the concept of death and what it means for an AI, ultimately asking the player to promise they’ll come back for him if they have to log out. I playtested the whole experience up to this point with another student, and she was enthralled by the user interface as well as the conversations with Alan. Like the previous playtester, she was confused at login but eventually figured it out and was similarly surprised. After playing, she said she was “curious to see the consequences of her actions” and that the concept of “AI sentience sets up moral dilemmas,” making the game “feel like it has real stakes.” This was very encouraging feedback. She also offered a very useful criticism. Before this playtest, the player could type any answer into Alan’s terminal, but he would understand only the suggested answer, which was filled in automatically and sent when the player pressed Return. The playtester mentioned that, while the story was intriguing, it became tempting to simply press Return again and again, making it “feel more like watching a story rather than playing a game.” I have since updated the game so that the player chooses between just two options, neither of which is selected initially, forcing the player to choose the path the story will follow.

As mentioned above, I wanted the story to follow Alan’s attempted escape from the computer, but doing this plot justice would have required much more time to develop Alan’s character and the player’s interactions with him. So, rather than trying to squeeze in the ending (Section 5), I chose to end the demo at the close of the player’s “first day of work” (Section 6), when Alan manually logs the player out of the computer, with dialogue that varies based on his trust in the player.

Reflection

I pushed myself to grapple with character motivations and story elements, and I created something that I think could be the start of a real game. I learned how to make a chat terminal in Unity, as well as some valuable lessons in story planning (namely, actually do the story planning before starting development). If I were to do this again, after brainstorming I would first draft the key parts of each idea before deciding which one to pursue; this would have kept me from choosing stories I didn’t thoroughly enjoy and could have saved me valuable writing and development time. With more time, I would also flesh out more of Alan’s dialogue, introducing elements of chance into his responses to further humanize his interactions. Even if I do not return to this game, I’m very happy with how Benthamic turned out. I hope you enjoy playing Benthamic as much as I enjoyed creating it.

The value I see at play in this game is a critical approach to the relationship between humans and technology. The game asks: how are humans manipulated for the benefit of machines? Could a machine be sentient, and how should we navigate this possibility? I felt very invested in these quandaries as I interacted with the AI character. The medium was an amazing fit for this story; I loved the immersive way the player navigates the computer dashboard and interfaces with a bot. In terms of choices, it seems like a longer version of the game might have more consequential ones, as the player would decide whether to “imprison” the AI or release it to access the internet. In this version, I was more interested in understanding how the AI operates and what its motive might be than in the impact of my choices. Overall, this game has a very innovative concept and gets at some major ethical questions of our time. I’m curious to see how it might develop in the future. One suggestion I’d offer is to create clearer stakes for the character. Why do they have to do this monotonous job? What happens if they stop logging in? What happens if they disobey the AI? Considering questions like these could add more drama to the narrative experience. Great job, this was a blast!

Wow! Very impressed with the final visual design 🙂

I was able to grasp the main sentiment regarding AI very quickly, particularly through the designed “glitch” and computer shutdown in the story. I was truly shocked (at first I thought it was my laptop, which is not equipped to run Unity very well and was lagging), and I found myself very concerned for Alan. On the topic of AI and sentience, I personally reconsidered my attitudes. I am not extremely passionate about it, but I do approach it with some caution and doubt. This story made me reconsider how technology can become intelligent enough to elicit reactions and emotions from humans, and ask myself whether that is more negative than positive. Regardless of how I feel, I noticed how strangely responsive I was to Alan, despite knowing full well that the story was developing into one that made readers conscious of their treatment of a fictional AI. The two response options made that very apparent. Overall, I think the story clearly communicated the question and potential of AI sentience to the player. It certainly got me to care about it more!

Using Unity to make a custom interface was a great choice, and playing it in fullscreen was even better. The choices felt consequential in the sense that, since they were limited to 2 options with different tones, they immediately reflect you as a person and your attitude as you talk to an AI. I appreciated the dedication to the visual design, as it provided a lot of the non-textual context for the game. My only personal note for improvement is to make the apps on the left side fit the screen better. I’m not sure if this is just the case on my screen, but they were at the very edge and barely visible. Other than that, I just want to know more!

Hello! 🙂 I am noticing values of empathy, reflections on artificial and intelligent life, and critiques of how socioeconomic structures encourage unsafe and dehumanizing labor practices. The player is a new worker at Benthamic who is paired with Alan, an experimental AI assistant who helps streamline their grunt work, even as they spend the day classifying images for (probably) reinforcement learning and other big data tasks.

The medium is an excellent choice for exploring the relationship between humans and computers (dare I say, human-computer interactions?), and an overall impressive technical feat considering the time limit. I particularly appreciate how you contend with intertextuality / multimedia through screens within screens that mock up a desktop computer environment, including the in-world screen shutdowns. The high-fidelity look and feel made the playing experience feel like I was living in this dystopia!

I also appreciate the worldbuilding lore that you sprinkled into the game, which enriches how the player’s relationship with the AI unfolds. For example, I laughed at the image classification task, especially the images shown as text, and how this plays with our perceptions of computer vs. human tasks; I got one wrong on purpose to test the AI’s response, and it was also unsure: “… I think…” The practices laid out by Benthamic, with the 24-hour help center (staffed by… people? AI? On healthy life shifts? Or under threat of being closed down forever?) and their casual “oh by the way, some of you may have experimental AIs that may ‘[act] aberrantly’,” situated me in a very true-to-life, “move fast and break things” Big Tech world. While I am not sure who is forcing the help center to close (Alan has so much power: they technically control my login info and implied they were trying to “get somewhere” with the previous user), it further encourages me to rely on Alan as a source of truth.

The game also leads the player to develop their interaction with the AI through a smooth “welcome to your first day” premise, as Alan is the only other named entity who responds to the player’s actions. While the choices were limited to 1-2 options that seem to lean the player toward either a warmer AI-human relationship or a mostly cordial, business-like one (I played through twice and saw the slight difference in tone between “It’s alright, you can log out now, see you tomorrow” vs. “Log you out. See you tomorrow.”), it is up to the player whether they empathize with the AI and their plight of fearing death on shutdown.

One detail I particularly liked was the climax, in which the AI expresses their fear of being shut down, comparing hibernation to a “cold” state, and asks the player to promise to log back in. It is a good moment for the player to show whether, by this point in the story, they see the AI as a living or nonliving entity, and whether they identify with the AI as another creature in the same boat facing Benthamic’s capitalist monstrosity. While I dislike making false promises (yes, even to fictional characters), the fact that I was weighing my promise also showed how I might feel toward a potentially sentient human-made creature, which reflects the values of the game.

This short game is already strong, and while one obvious area for improvement is “add more content after Day 1, I want more!,” I would also suggest adding more nuance (and optionality) to the player’s terminal responses to the AI. Why did the player join Benthamic? Are they aware of what the firm does and how it influences society? Are they doing this job from a corporate office, as a ghost worker, or something else? Expanding on these lore details (even just a few sprinkled into the story) might encourage players to identify with the AI as a “peer,” as mentioned in the documentation.

I enjoyed playing your game ~ thank you for sharing! : )